Tag: Education

-

Philosophy faculty empower students to tackle ethical issues in data science

How would Aristotle approach Facebook’s methods? The future of data science is a mystery surrounded by concerns about autonomy, responsibility, and other ethical issues related to technology. The recent Facebook-Cambridge Analytica data scandal raised concerns of personal privacy, ethical principles for social media businesses, as well as misinformation.

In his single-credit PHIL 293 course “Ethics for Data Sciences”, assistant professor of philosophy Taylor Davis challenges students to re-think what they believe about morality in the wake of these controversies in science and technology.

In light of advancing technologies in artificial intelligence and machine learning, Davis said it is becoming more clear that ethical training is necessary for data science.

“Broadly what is happening, especially we see Mark Zuckerberg testify in front of Congress, is ethics pervades all of our lives as we become more reliant on it in many ways,” Davis said.

Partnered with the Integrative Data Science Initiative, faculty are planing to make the course three credits with an online version, said Matthew Kroll, post-doctoral researcher in philosophy, and even advance ethics through other initiatives.

“One of the questions I start the class with is ‘What does it mean to be ethical in the age of the big data?’” Kroll said. The students discuss current events like the Facebook Cambridge Analytica data scandal and individuals and organizations should have done.

Kroll has also previously taught the course by giving students a “tool kit” of philosophical methods of reasoning to address these issues as well as concerns of rights and autonomy with respect to artificial intelligence and autonomous cars.

“If you’ve never taken an ethics course, you at least get to dip your toes in the water of what ethics is,” Kroll said.

By teaching students how to form philosophical arguments and put issues in historical perspectives, Kroll hopes to teach students how to make better ethical decisions.

“If these students feel like in their professional lives they reach a moment or a threshold that, if there’s an ethical issue in their workplace like ‘Are we gonna sell data to a particular government interest?’ to at least say ‘I don’t think this is right’ or ‘I think this is an unethical use of user data,’” Kroll said. “If one, two, or three students do that, then I’ll feel like the class is a success.”

Through an applied ethics course, Davis emphasized philosophy runs in the background as he instructs. In contrast to the standard philosophy-based methods of beginning with general, theoretical principles, he delivers current events as case studies.

Davis created the course by substituted data science with engineering from an engineering ethics course.

With a case-study based bottom-up approach, students begin with real world problems then figure out how to reason with those actions in mind. From these methods of isolating actions from agents and forming arguments, they learn how ethical principles such as not causing unnecessary harm to others and respecting personal rights come into play.

Through these methods, students find truth and clarity on tricky issues that they may apply to other problems such as autonomy of self-driving cars and gene editing technologies, Kroll said.

“Machine learning algorithms perform calculations in opaque methods,” Davis said. “They create a complicated model that researchers need to understand.” Davis said this would let researchers determine how to resolve social inequality issues related to women and minorities or forming predictions on individual habits. He wants students to consider how similar people are to robots that may perform human-like actions and how that raises issues about personal agency.

“The idea is to give people that kind of training to see and identify ethical issues,” Davis said.

Students will continue to grapple with what it means to be human in the “age of big data”, Kroll said. “The ethics in data science instruction serves to empower students in their future careers.”

-

Data-based analysis reveals data science job prospects

With Purdue University’s Data Science Initiative emphasizing on students having the best skills necessary for jobs, here’s a data-driven look at what the job market will look like.

The job description data came from studies by Corey Seliger, Director of Integration and Data Platforms, ITaP and the web scraping service PromptCloud. The data sets that scraped job posting websites were obtained through the Purdue University Research Repository and the machine learning community Kaggle.

Though Purdue does not offer as many courses from their Data Science Initiative when compared to other schools, this is less of a statement about Purdue’s Data Science Initiative and more of what universities classify as “data science” courses. The interdisciplinary nature of Purdue’s Data Science efforts meant that many courses through various other departments were not counted as data science courses. This analysis did not include, for example, courses from biology or neuroscience departments that may be considered intensive with data analysis.

The Statistics Living-Learning Community’s publication and presentation record since their inception shows the influence the Data Science Initiative has had in getting students’ work published.

The majority of them are in big cities like Chicago and San Francisco. These cities show where the future data science jobs are likely to be. They can be classified with corresponding metrics of predictability.

More data science jobs require a Ph.D. when compared to other education qualifications of Bachelor’s degrees or Master’s degrees. 16,618 require a Ph.D, 10,695 required a Bachelor’s, and 8,759 required a Master’s. Over half of the jobs listed, 82,726 contained no educational requirements.

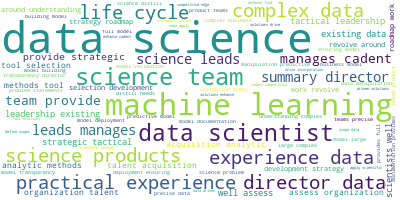

The associated wordcloud provides another glimpse into the words these job postings most commonly use. Though these words may be used frequently throughout the job postings, their importance to data science as a whole shows by their usage.

The wordcloud shows relative frequencies of each keyword from the job descriptions. Key phrases like “practical experience,” “complex data,” and “strategic tactical” show the types of skills data science jobs require. That jobs requiring these skills means students seeking data science careers should understand them for their career goals.

The data was taken from over 90,000 job postings of data science jobs. The job postings contained various information including salary, background, experience, and personal skills relevant to the job. They emphasized specific skills they look for in applicants and the types of experience required.

Latent Dirichlet allocation (LDA) is a generative statistical model to use unobserved groups that explain why parts of the data are similar to make observations. The LDA analysis of data science job prospects can be found here. With LDA, we observe how keywords or phrases cluster among themselves in groups of principal components. LDA has uses in Natural Language processing and topic modeling for large amounts of text. Modeling texts on various ways their keywords cluster can be used to predict books to recommend to someone based on their previous reading. Publications can model topics to extract key features from articles or cluster articles that are similar close together.

LDA involves estimating topic assignments for key topics in the text. For each document, an amount of topics is chosen and words are generated according to the Dirichlet distribution. Then the model works backwards to figure out how to find a set of topics and corresponding distribution that could generate the document’s content.

It determines how document topics vary among themselves, the words associated with each topic, and the phi value. The phi value is how likely a word belongs to a certain topic. The script, written in the python programming language, takes about 20 seconds to extract and analyze data while outputting results nearly 100,000 job descriptions. The speed of the pipeline came through programming techniques to take advantage of the the similarity between the input files. A multi-threaded procedure was used to run these tasks in parallel with one another and a search approach that saves memory by directly converting the file text to manageable data reduced the pipeline’s runtime from several hours to less than a minute.

Through various sampling methods, LDA can learn how to improve its method of determining the document’s content. We can train the LDA on various known sets of data to learn how to extract features and form predictions.

The process included parsing each file for keywords. This meant removing unnecessary words (such as “and” and “the”) as well as combining similar words into one another (such as “analysis” and “analyze”).

The LDA models can be further optimized for use in sampling such that predictions may be formed on future job prospects in data science. Tools to extract data science job information would prove beneficial for improving the LDA model. Education curricula can reflect these trends in a fluctuating job market. Future work can include finding more varied sets of data such as including machine learning and artificial intelligence keywords in addition to data sciences ones.

Using a support vector machine for classification and data-fitting methods training and testing, the models can be used as the basis for forming predictions on the data science job market. The entirety of these techniques can be found here: https://github.com/HussainAther/journalism/blob/master/src/python/postings/postingAnalysis.py.